Security Principles

Dr. Greg Bernstein

March 15th, 2022

References

Introduction to CyBOK Issue 1.0 Section 4 “Principles”.

Systems Security Engineering: Considerations for a Multidisciplinary Approach in the Engineering of Trustworthy Secure Systems, NIST SP 800-160v1, November 2016, Appendix F.

Saltzer and Schroeder Principles

Background

The earliest collected design principles for engineering security controls were enumerated by Saltzer and Schroeder in 1975. These were proposed in the context of engineering secure multi-user operating systems supporting confidentiality properties for use in government and military organizations.

Terminology

From Wikipedia: Security Controls

Security controls are safeguards or countermeasures to avoid, detect, counteract, or minimize security risks to physical property, information, computer systems, or other assets. In the field of information security, such controls protect the confidentiality, integrity and availability of information.

Economy of Mechanism

The design of security controls should remain as simple as possible, to ensure high assurance. Simpler designs are easier to reason about formally or informally, to argue correctness. Further, simpler designs have simpler implementations that are easier to manually audit or verify for high assurance.

Example: Economy of Mechanism

This principle underlies the notion of the Trusted Computing Base (TCB), namely the collection of all software and hardware components on which a security mechanism or policy relies. It implies that the TCB of a system should remain small to ensure that it maintains the security properties expected.

Question: Simpler is Better?

Given two “mechanisms” that can perform the same “security job”, why would you prefer the simpler of the two?

Example: Trusted Platform Modules (TPM)

From Wikipedia: TPM

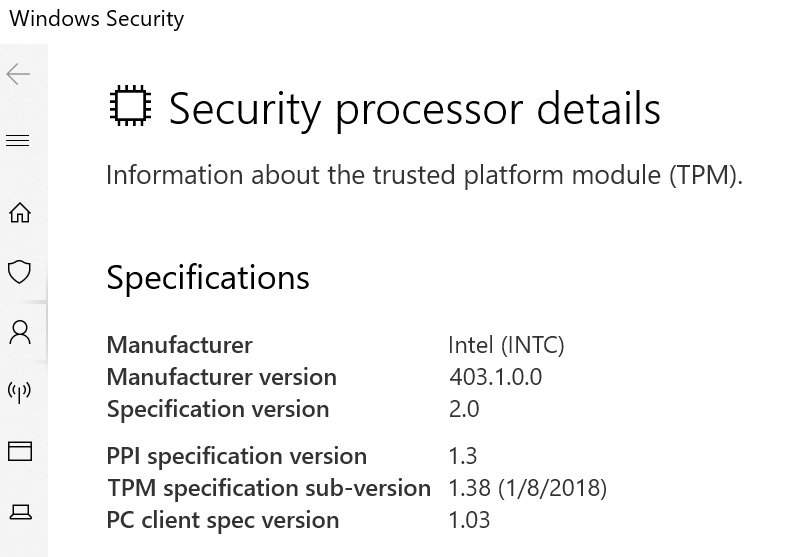

Example: TPM Windows

Fail-safe Defaults

Security controls need to define and enable operations that can positively be identified as being in accordance with a security policy, and reject all others. In particular, Saltzer and Schroeder warn against mechanisms that determine access by attempting to identify and reject malicious behavior.

Fail-Safe Defaults: Discussion

Malicious behavior, as it is under the control of the adversary and will therefore adapt, is difficult to enumerate and identify exhaustively. As a result, basing controls on the exclusion of detected violations, rather than the inclusion of known good behavior, is error-prone. It is notable that some modern security controls violate this principle, including signature-based anti-virus software and intrusion detection.
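
Example: Allow List versus Deny List

The difference between including known good behavior and excluding known bad behavior can be shown in a few lines. This is a minimal sketch, not taken from the references: the roles, operations, and the names `ALLOWED`, `DENIED`, `allowlist_check`, and `denylist_check` are all hypothetical.

```python
# Hypothetical policy: the only (role, operation) pairs that are permitted.
ALLOWED = {
    ("student", "read_grades"),
    ("instructor", "read_grades"),
    ("instructor", "write_grades"),
}

# Hypothetical deny list: operations someone thought to forbid in advance.
DENIED = {
    ("student", "write_grades"),
}


def allowlist_check(role: str, operation: str) -> bool:
    """Fail-safe default: permit only what the policy positively names."""
    return (role, operation) in ALLOWED


def denylist_check(role: str, operation: str) -> bool:
    """Default-permit: reject only what was anticipated as bad."""
    return (role, operation) not in DENIED


# An operation nobody anticipated when the lists were written.
unexpected = ("student", "delete_grades")
print(allowlist_check(*unexpected))  # False: rejected by default
print(denylist_check(*unexpected))   # True: slips through
```

The allow list fails closed on anything it has never seen, while the deny list is only as good as its author's ability to predict the adversary.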

What’s Wrong with AV and IDS

Does this principle say what it “sounds like”?

Why do the authors claim that AV and IDS systems don’t follow this principle?

Complete Mediation

All operations on all objects in a system should be checked to ensure that they are in accordance with the security policy. Such checks would usually involve ensuring that the subject that initiated the operation is authorized to perform it, presuming a robust mechanism for authentication. However, modern security controls may not base checks on the identity of such a subject but other considerations, such as holding a ‘capability’.

Complete Mediation Questions

In the context of an operating system

- What are the objects they may be concerned with?

- What are the operations they may be concerned with?

- What are the “security policies” that would be enforced?

Open Design

The security of the control must not rely on the secrecy of how it operates, but only on well specified secrets or passwords. This principle underpins cyber security as a field of open study: it allows scholars, engineers, auditors, and regulators to examine how security controls operate to ensure their correctness, or identify flaws, without undermining their security. The opposite approach, often called ‘security by obscurity’, is fragile as it restricts who may audit a security control, and is ineffective against insider threats or controls that can be reverse engineered.
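
Example: Open Design with a Secret Key

A small illustration of "well specified secrets": message authentication with HMAC-SHA256 from the Python standard library. The algorithm is public and widely reviewed; only the key is secret. This is a sketch under assumptions, and the helper names `make_tag` and `verify_tag` and the sample message are hypothetical.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Authenticate a message with HMAC-SHA256, a publicly specified algorithm."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


key = secrets.token_bytes(32)              # the only secret in the design
message = b"transfer 100 to account 42"    # hypothetical message
tag = make_tag(key, message)

print(verify_tag(key, message, tag))                       # True
print(verify_tag(secrets.token_bytes(32), message, tag))   # False unless the random key collides
```

Everything about how the control works can be published and audited; an attacker who reads this code still cannot forge a tag without the key.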

Open Design: Question

Does the “open design” principle say we should reveal all the details about our system?

Separation of Privilege 1

Security controls that rely on multiple subjects to authorize an operation provide higher assurance than those relying on a single subject. This principle is embodied in traditional banking systems, and carries forward to cyber security controls.

Separation of Privilege 2

However, while it is usually the case that increasing the number of authorities involved in authorizing an operation increases assurance around integrity properties, it usually also decreases assurance around availability properties. The principle also has limits, relating to over-diluting responsibility, leading to a ‘tragedy of the security commons’ in which no authority has incentives to invest in security, assuming the others will.

Banking Example 1

From Safe Deposit Box

Banking Example 2

From Safe Deposit Box

Safe deposit boxes are kept in a secure vault at a bank or credit union branch. Typically, it takes two keys to open a safe deposit box: your key, plus a key that your bank or credit union retains. To access what’s in your safe deposit box, you’ll need to go to the branch, show proof of identity and provide your key. As the owner of its contents, only you, and not the bank, know what’s held inside it. (The bank or credit union does not hold a copy of your personal key; only you do.)

Separation of Privilege Discussion

Separation of privilege in financial transactions

Separation of privilege in legal processes

Separation of privilege and system administration

Separation of privilege in national security

NIST SP 800-172

Enhanced Security Requirements for Protecting Controlled Unclassified Information

“This publication provides federal agencies with recommended enhanced security requirements for protecting the confidentiality of CUI”

NIST 800-172 Access Control 1

3.1.1e Employ dual authorization to execute critical or sensitive system and organizational operations.

NIST 800-172 Access Control 2

Dual authorization, also known as two-person control, reduces risk related to insider threats. Dual authorization requires the approval of two authorized individuals to execute certain commands, actions, or functions. For example, organizations employ dual authorization to help ensure that changes to selected system components (i.e., hardware, software, and firmware) or information cannot occur unless two qualified individuals approve and implement such changes.

NIST 800-172 Access Control 3

These individuals possess the skills and expertise to determine if the proposed changes are correct implementations of the approved changes, and they are also accountable for those changes. Another example is employing dual authorization for the execution of privileged commands. To reduce the risk of collusion, organizations consider rotating assigned dual authorization duties to reduce the risk of an insider threat. Dual authorization can be implemented via either technical or procedural measures and can be carried out sequentially or in parallel.
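
Example: Dual Authorization Sketch

One way the "technical measures" mentioned above might look in code. This is a minimal sketch, not an implementation prescribed by NIST SP 800-172: the class `DualAuthorizedAction`, its fields, and the approver names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DualAuthorizedAction:
    """A sensitive action that runs only after two distinct approvers sign off."""
    description: str
    authorized: frozenset                      # hypothetical set of qualified approvers
    approvals: set = field(default_factory=set)

    def approve(self, person: str) -> None:
        if person not in self.authorized:
            raise PermissionError(f"{person} is not an authorized approver")
        self.approvals.add(person)             # a set, so approving twice counts once

    def execute(self) -> str:
        if len(self.approvals) < 2:
            raise PermissionError("two distinct approvals are required")
        return f"executed: {self.description}"


change = DualAuthorizedAction("update firmware", frozenset({"alice", "bob", "carol"}))
change.approve("alice")
change.approve("alice")        # a repeated approval by the same person adds nothing
try:
    change.execute()
except PermissionError as err:
    print(err)                 # two distinct approvals are required
change.approve("bob")
print(change.execute())        # executed: update firmware
```

Approvals here are collected sequentially; the same check would work if approvals arrived in parallel, since only the count of distinct approvers matters.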

Least privilege 1

Subjects and the operations they perform in a system should be performed using the fewest possible privileges. For example, if an operation needs to only read some information, it should not also be granted the privileges to write or delete this information.

Least privilege 2

Granting the minimum set of privileges ensures that, if the subject is corrupt or software incorrect, the damage they may do to the security properties of the system is diminished. Defining security architectures heavily relies on this principle, and consists of separating large systems into components, each with the least privileges possible — to ensure that partial compromises cannot affect, or have a minimal effect on, the overall security properties of a whole system.
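
Example: Least Privilege on a File Handle

The read-versus-write example above can be tried directly. In this sketch a function that only needs to read opens its file read-only, so even a mistaken write is refused. The function name `count_lines` and the temporary file are hypothetical.

```python
import io
import os
import tempfile


def count_lines(path: str) -> int:
    """Needs only to read, so it opens the file read-only."""
    with open(path, "r", encoding="utf-8") as handle:
        return sum(1 for _ in handle)


# Hypothetical data file created just for the demonstration.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write("alpha\nbeta\n")
    path = tmp.name

print(count_lines(path))  # 2

# A read-only handle cannot be used to modify the file, even by mistake.
with open(path, "r", encoding="utf-8") as handle:
    try:
        handle.write("gamma\n")
    except io.UnsupportedOperation as err:
        print("write refused:", err)

os.remove(path)
```

The same idea scales up: a component that holds only read access cannot corrupt data if it is buggy or compromised.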

Least Privilege: Root versus Sudo 1

From Help Ubuntu

In Linux (and Unix in general), there is a SuperUser named root. The Windows equivalent of root is the Administrators group. The SuperUser can do anything and everything, and thus doing daily work as the SuperUser can be dangerous. You could type a command incorrectly and destroy the system. Ideally, you run as a user that has only the privileges needed for the task at hand. In some cases, this is necessarily root, but most of the time it is a regular user.

Least Privilege: Root versus Sudo 2

From Ask Ubuntu

sudo lets you run with root privileges and times out, whereas root stays on as long as root is logged in. For security purposes, sudo is better.

Least Common Mechanism 1

It is preferable to minimize sharing of resources and system mechanisms between different parties. This principle is heavily influenced by the context of engineering secure multi-user systems. In such systems common mechanisms (such as shared memory, disk, CPU, etc.) are vectors for potential leaks of confidential information from one user to the other, as well as potential interference from one user into the operations of another.

Least common mechanism 2

Data Center Example 1

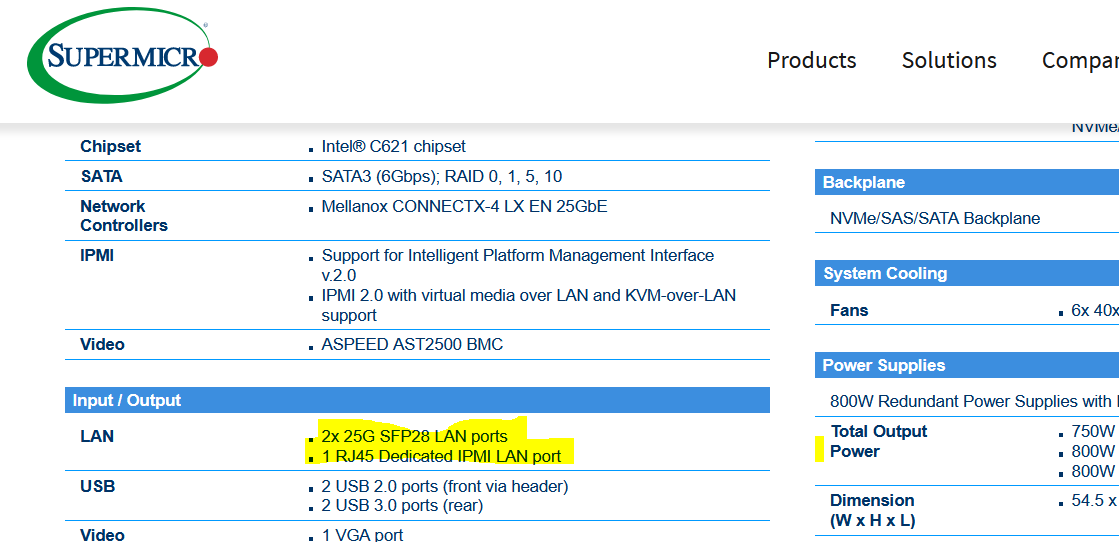

Data center server example SuperMicro

Data Center Example 2

This server has:

- Two 25 Gbps optical (Ethernet) LAN interfaces

- One RJ45 electrical LAN interface (1 Gbps or less) for IPMI

- Why bother with the low-speed interface?

Data Center IPMI

From Wikipedia: IPMI

The Intelligent Platform Management Interface (IPMI) is a set of computer interface specifications for an autonomous computer subsystem that provides management and monitoring capabilities independently of the host system’s CPU, firmware (BIOS or UEFI) and operating system. IPMI defines a set of interfaces used by system administrators for out-of-band management of computer systems and monitoring of their operation. For example, IPMI provides a way to manage a computer that may be powered off or otherwise unresponsive by using a network connection to the hardware rather than to an operating system or login shell.

Data Center Example 3

Least common mechanism: the management of servers in large data centers takes place over a separate network from the high-speed user traffic network. The two networks use separate physical interfaces on the servers.

Psychological acceptability

The security control should be naturally usable so that users ‘routinely and automatically’ apply the protection. Saltzer and Schroeder specifically state that ‘to the extent that the user’s mental image of his protection goals matches the mechanisms he must use, mistakes will be minimized’.

What is Acceptable to You?

- Frequently require password changes

- Passwords requiring weird characters

- Multi-factor authentication

- Separate passwords per account

Work factor

Good security controls require more resources to circumvent than those available to the adversary. In some cases, such as the cost of brute forcing a key, the work factor may be computed and designers can be assured that adversaries cannot be sufficiently endowed to try them all. For other controls, however, this work factor is harder to compute accurately. For example, it is hard to estimate the cost of a corrupt insider, or the cost of finding a bug in software.
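
Example: Computing a Brute-Force Work Factor

For key search the work factor really can be computed. The sketch below estimates the expected time to find a key by exhaustive search. The guessing rate of one trillion keys per second is an assumption chosen for illustration, and `years_to_search` is a hypothetical helper.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def years_to_search(key_bits: int, guesses_per_second: float) -> float:
    """Expected years to find a key by brute force (half the key space on average)."""
    expected_guesses = 2 ** (key_bits - 1)
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR


# Assumed adversary: one trillion guesses per second.
rate = 1e12
for bits in (40, 56, 128):
    print(f"{bits}-bit key: {years_to_search(bits, rate):.3g} years")
```

Under this assumption a 40-bit key falls in under a second and a 56-bit key in about ten hours, while a 128-bit key takes on the order of 10^18 years. Each added bit doubles the adversary's work.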

Work Factor Example

- Dictionary attacks to “crack” passwords are very common; the main way to counter them is to increase the “work” an attacker must do per guess

- First, passwords need to be “salted”

- Then appropriate algorithms with scalable work factors need to be used.

- See Password Hashing Competition and Wikipedia: Argon2
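
Example: Salted Hashing with a Tunable Work Factor

The two steps above can be sketched with the Python standard library. Argon2 is not in the standard library, so this sketch substitutes PBKDF2-HMAC-SHA256 from `hashlib`, which scales only computation time and is not memory-hard the way Argon2 is. The iteration count is an assumed value, and `hash_password` and `verify_password` are hypothetical helper names.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; raise it as hardware gets faster


def hash_password(password: str, iterations: int = ITERATIONS) -> tuple:
    """Return (salt, digest, iterations) for storage alongside the account."""
    salt = os.urandom(16)  # fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest, iterations


def verify_password(password: str, salt: bytes, digest: bytes, iterations: int) -> bool:
    """Repeat the same computation and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, digest)


salt, digest, n = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, digest, n))  # True
print(verify_password("password123", salt, digest, n))                   # False
```

The salt forces an attacker to attack each stored password separately, and the iteration count multiplies the cost of every dictionary guess while costing a legitimate login only a fraction of a second.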

Compromise Recording

It is sometimes suggested that reliable records or logs, that allow detection of a compromise, may be used instead of controls that prevent a compromise. Most systems do log security events, and security operations heavily rely on such reliable logs to detect intrusions. The relative merits — and costs — of the two approaches are highly context-dependent.
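
Example: A Tamper-Evident Log

Logs only help detect a compromise if they are reliable. One common way to make after-the-fact edits detectable is to chain entries together by hash. This is a minimal sketch under assumptions, not a technique named in the references: `append_entry`, `verify_log`, `GENESIS`, and the events are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def _entry_hash(event: str, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: str) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(event, prev_hash)})


def verify_log(log: list) -> bool:
    """Recompute the chain; an edited entry or one removed from the middle breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
for event in ("login alice", "sudo alice", "logout alice"):  # hypothetical events
    append_entry(log, event)
print(verify_log(log))                  # True
log[1]["event"] = "nothing happened"    # an intruder rewrites history
print(verify_log(log))                  # False
```

The chain by itself does not stop an intruder who can rewrite the whole log and recompute every hash, or who truncates the end; for that the most recent hash must also be recorded somewhere the intruder cannot reach.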

NIST Principles

Overview

From NIST SP800-160v1 Appendix F

Security design principles and concepts serve as the foundation for engineering trustworthy secure systems, including their constituent subsystems and components. These principles and concepts represent research, development, and application experience starting with the early incorporation of security mechanisms for trusted operating systems, to today’s wide variety of fully networked, distributed, mobile, and virtual computing components, environments, and systems.

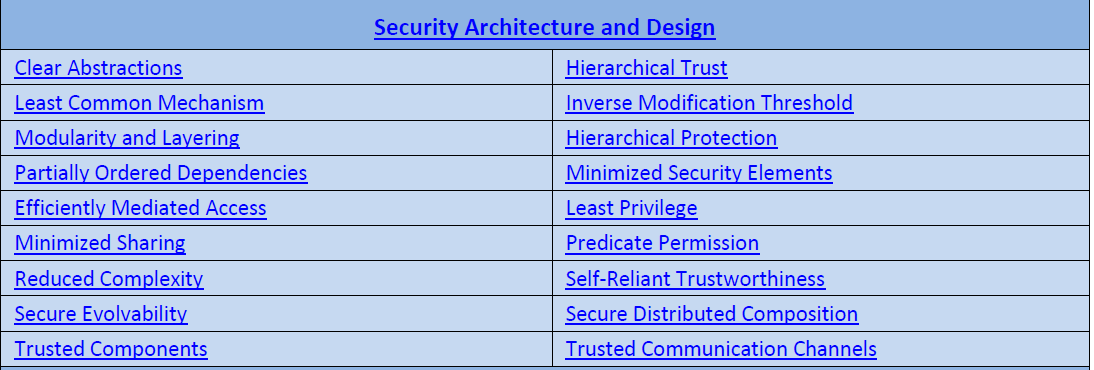

Security Architecture and Design

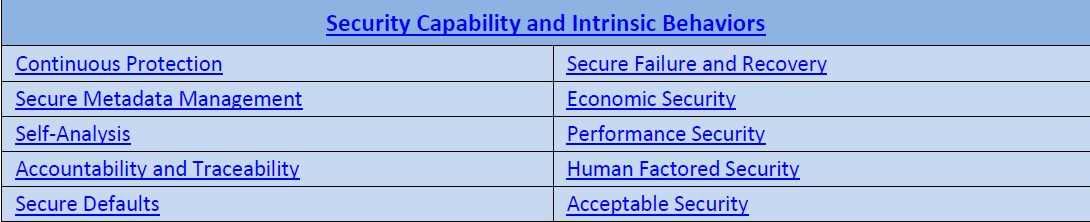

Security Capability and Intrinsic Behaviors

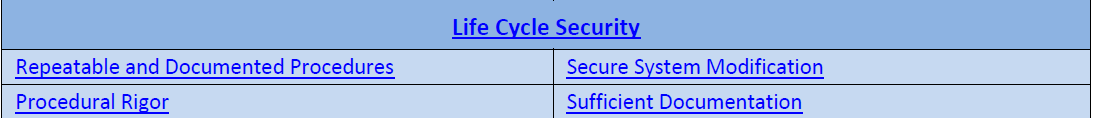

Life Cycle Security

NIST Approaches

Reference Monitor Concept NIST 1

The reference monitor concept provides an abstract security model of the properties that must be achieved by any system mechanism claiming to securely enforce access controls.

Reference Monitor Concept NIST 2

The abstract instantiation of the reference monitor concept is an “ideal mechanism” characterized by three properties:

- the mechanism is tamper-proof (i.e., it is protected from modification so that it always is capable of enforcing the intended access control policy);

- the mechanism is always invoked (i.e., it cannot be bypassed so that every access to the resources it protects is mediated);

Reference Monitor Concept NIST 3

- and the mechanism can be subjected to analysis and testing to assure that it is correct (i.e., it is possible to validate that the mechanism faithfully enforces the intended security policy and that it is correctly implemented).

Reference Monitor Example OS 1

From Wikipedia: Reference Monitor Concept

In operating systems architecture a reference monitor concept defines a set of design requirements on a reference validation mechanism, which enforces an access control policy over subjects’ (e.g., processes and users) ability to perform operations (e.g., read and write) on objects (e.g., files and sockets) on a system. The properties of a reference monitor are captured by the acronym NEAT, which means:

Reference Monitor Example OS 2

From Wikipedia: Reference Monitor Concept

The reference validation mechanism must be Non-bypassable, so that an attacker cannot bypass the mechanism and violate the security policy.

The reference validation mechanism must be Evaluable, i.e., amenable to analysis and tests, the completeness of which can be assured (verifiable). Without this property, the mechanism might be flawed in such a way that the security policy is not enforced.

Reference Monitor Example OS 3

From Wikipedia: Reference Monitor Concept

The reference validation mechanism must be Always invoked. Without this property, it is possible for the mechanism to not perform when intended, allowing an attacker to violate the security policy.

The reference validation mechanism must be Tamper-proof. Without this property, an attacker can undermine the mechanism itself and hence violate the security policy.

Applying the Reference Monitor Concept

What does this mean in general?

Reference: noun “The act of referring to something.” Interpret this as getting access to some object or resource.

Monitor: transitive verb “To keep close watch over; supervise.” Interpret this as controlling access to some object.

Reference Monitor: Something to supervise references (accesses) to objects/resources.

Common Programming Situation: Web APIs

- Every access to a web API should be checked

- Is the user authenticated?

- Is the user allowed to use the API?

- Good Web server frameworks make this relatively straightforward
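
Example: Mediating Every API Call

The two checks listed above can be attached to every handler with a decorator, which is roughly what web frameworks do with middleware. This sketch uses no particular framework; `SESSIONS`, `PERMISSIONS`, `Forbidden`, `mediated`, and `delete_report` are all hypothetical names.

```python
from functools import wraps

# Hypothetical stores; a real service would consult its session and policy systems.
SESSIONS = {"token-abc": "alice", "token-xyz": "bob"}
PERMISSIONS = {"alice": {"read_report", "delete_report"}, "bob": {"read_report"}}


class Forbidden(Exception):
    """Raised when a request fails authentication or authorization."""


def mediated(permission: str):
    """Wrap a handler so both checks run before it on every call."""
    def decorator(handler):
        @wraps(handler)
        def wrapper(token, *args, **kwargs):
            user = SESSIONS.get(token)
            if user is None:                                     # Is the user authenticated?
                raise Forbidden("not authenticated")
            if permission not in PERMISSIONS.get(user, set()):   # Allowed to use this API?
                raise Forbidden(f"{user} may not {permission}")
            return handler(user, *args, **kwargs)
        return wrapper
    return decorator


@mediated("delete_report")
def delete_report(user: str, report_id: int) -> str:
    return f"{user} deleted report {report_id}"


print(delete_report("token-abc", 7))    # alice deleted report 7
for token in ("token-xyz", "bogus"):
    try:
        delete_report(token, 7)
    except Forbidden as err:
        print("refused:", err)
```

A decorator only mediates the handlers it is applied to, so a forgotten decorator is a bypass. Applying the check centrally, before any routing to handlers, is closer to the "always invoked" property.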

Defense in Depth NIST

Defense in depth describes security architectures constructed through the application of multiple mechanisms to create a series of barriers to prevent, delay, or deter an attack by an adversary. The application of some security components in a defense in depth strategy may increase assurance, but there is no theoretical basis to assume that defense in depth alone could achieve a level of trustworthiness greater than that of the individual security components used. That is, a defense in depth strategy is not a substitute for or equivalent to a sound security architecture and design that leverages a balanced application of security concepts and design principles.

Defense in Depth Wikipedia

Defense in depth is a concept used in Information security in which multiple layers of security controls (defense) are placed throughout an information technology (IT) system. Its intent is to provide redundancy in the event a security control fails or a vulnerability is exploited that can cover aspects of personnel, procedural, technical and physical security for the duration of the system’s life cycle.

Defense in Depth: Home Network

- House with doors and locks (Physical security)

- Router with Firewall

- Computer with internal Firewall

- Computer/Cell Phone/Tablet with login (password and/or biometric)

- Applications with additional

Isolation

From NIST SP800-160v1 Appendix F

Two forms of isolation are available to system security engineers: logical isolation and physical isolation. The former requires the use of underlying trustworthy mechanisms to create isolated processing environments. These can be constructed so that resource sharing among environments is minimized. Their utility can be realized in situations in which virtualized environments are sufficient to satisfy computing requirements. In other situations, the isolation mechanism can be constructed to permit sharing of resources, but under the control and mediation of the underlying security mechanisms, thus avoiding blatant violations of security policy.

Logical Compute Isolation Examples

- Separate compute processes

- Separate compute containers

- Separate virtual machines

Logical Network Isolation Examples

- Virtual LANs

- MPLS label switched paths (LSPs)

- Separate Autonomous Systems (AS) – IP inter-domain routing

Physical Isolation Examples

- Separate compute cores on a processor chip

- Separate processor chips on a server motherboard

- Out of band management networks

- Separate fiber optic wavelengths, separate fiber optic cables